With AI search changing how consumers search online, Large Language Models (LLMs) are rapidly becoming a crucial source of information for consumers, and therefore a crucial focus for SEOs.

Just as we track keywords in traditional search, understanding your performance in AI search is essential. It shows us which content performs best in AI results and, more importantly, why that content outperforms the rest.

What is LLM Tracking and Why Does It Matter?

LLM tracking allows us to monitor your brand's performance across key AI search platforms, such as ChatGPT, Perplexity, Google AI Overviews, and Google's AI Mode.

Similar to keyword tracking, we continually monitor a set of targeted "prompts" over time. This lets us understand from a data perspective where your brand is succeeding (or where it's not) and where your competitors are outperforming you.

A prompt is simply the search query you want to track, for instance: "What’s the best travel provider for a cheap holiday to Spain?" The more prompts we track, the more comprehensive and reliable the data we have to review.

Key Metrics for Tracking Performance in AI Search

When looking to understand how well you’re performing in AI search, and whether AI optimisation is the right tactic for you, you first need to understand what metrics we can track and what they mean.

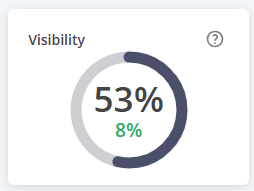

Visibility

This measures how often your tracked website is mentioned or shown in the LLM responses to the prompts you have chosen to monitor.

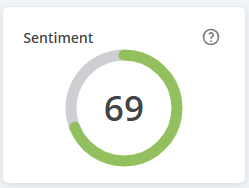
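The article doesn't specify exactly how Visibility is calculated, so the sketch below assumes the simplest reading: the percentage of collected responses that mention the brand by name at least once. The `Response` record, the helper names and the sample answers are all hypothetical, and a real tracking tool may weight or define this differently.

```python
import re
from dataclasses import dataclass


@dataclass
class Response:
    """One collected LLM answer to a tracked prompt (hypothetical structure)."""
    prompt: str
    llm: str
    text: str


def mentions_brand(text: str, brand: str) -> bool:
    # Whole-word, case-insensitive match, so "TUI" does not match "intuitive".
    return re.search(r"\b" + re.escape(brand) + r"\b", text, re.IGNORECASE) is not None


def visibility(responses: list[Response], brand: str) -> float:
    """Percentage of tracked responses that mention the brand at least once."""
    if not responses:
        return 0.0
    hits = sum(1 for r in responses if mentions_brand(r.text, brand))
    return 100.0 * hits / len(responses)


# Made-up responses, for illustration only.
responses = [
    Response("cheap holiday to Spain", "ChatGPT", "TUI and Jet2Holidays both offer good deals."),
    Response("cheap holiday to Spain", "Perplexity", "Jet2Holidays is a popular choice."),
    Response("reliable package holidays", "ChatGPT", "TUI is frequently recommended."),
    Response("reliable package holidays", "AI Mode", "Jet2Holidays has an intuitive booking flow."),
]
print(visibility(responses, "TUI"))  # 50.0
```

The word-boundary match matters: a naive substring check would count the fourth answer as a TUI mention because "intuitive" contains the letters "tui".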

Sentiment

This provides an average score that measures whether your brand is being portrayed in a positive or negative light across all tracked prompts.

For example, you might achieve high visibility by being displayed as a result, but if the LLM states that your “customer service is terrible,” that would lead to a negative sentiment score.

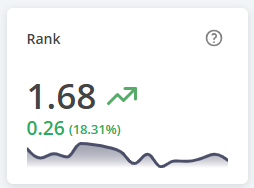
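How each response gets its sentiment score isn't described here, so this sketch only covers the averaging step. It assumes each response already has a 0-100 score and, as an assumption, skips responses where the brand isn't mentioned, so that being absent isn't counted as negative sentiment. The per-LLM scores reuse the 86/100 and 72/100 figures from the TUI example later in this article; the function name is hypothetical.

```python
from statistics import mean
from typing import Optional

# Per-LLM sentiment for one prompt (0-100 scale), using the TUI example figures.
# None means the brand was not listed, so there is nothing to score.
sentiment_by_llm: dict[str, Optional[int]] = {
    "ChatGPT": 86,
    "Perplexity": 72,
    "AI Mode": None,
}


def average_sentiment(scores: dict[str, Optional[int]]) -> Optional[float]:
    """Mean sentiment over responses that actually mention the brand."""
    present = [s for s in scores.values() if s is not None]
    return float(mean(present)) if present else None


print(average_sentiment(sentiment_by_llm))  # 79.0
```

Treating "not listed" as a score of 0 instead would mix Visibility into Sentiment, which is why the two are usually read side by side rather than combined.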

Rank (or Position)

This metric shows your brand's average position across all tracked prompts.

When an LLM mentions multiple brands in its response, they are usually listed in order of priority.

Rank tracking helps you understand how well LLMs are prioritising your brand in comparison to your competitors.

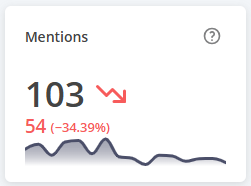
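A minimal sketch of rank tracking, assuming a brand's position is the order in which the tracked brands are first mentioned in a response (the article only says brands are "usually listed in order of priority"). The competitor list and sample answer are made up; the final line averages the TUI example positions from this article (ChatGPT #1, Perplexity #6, AI Mode not listed).

```python
import re
from typing import Optional


def brand_position(text: str, brands: list[str], target: str) -> Optional[int]:
    """1-based position of `target` among `brands`, ordered by first mention in `text`.

    Returns None when the target brand is not mentioned at all.
    """
    first_seen: dict[str, int] = {}
    for brand in brands:
        match = re.search(r"\b" + re.escape(brand) + r"\b", text, re.IGNORECASE)
        if match:
            first_seen[brand] = match.start()
    if target not in first_seen:
        return None
    ordered = sorted(first_seen, key=first_seen.__getitem__)
    return ordered.index(target) + 1


def average_rank(positions: list[Optional[int]]) -> Optional[float]:
    """Average position across responses where the brand was listed; lower is better."""
    listed = [p for p in positions if p is not None]
    return sum(listed) / len(listed) if listed else None


# Made-up answer listing three brands in order.
answer = "1. Jet2Holidays for value. 2. TUI for choice. 3. loveholidays for price."
competitors = ["TUI", "Jet2Holidays", "loveholidays"]
print(brand_position(answer, competitors, "TUI"))  # 2

# TUI example positions: ChatGPT #1, Perplexity #6, AI Mode not listed.
print(average_rank([1, 6, None]))  # 3.5
```

Because unlisted responses are excluded, a good average rank can hide poor coverage, so it is best read alongside Visibility.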

Mentions

This is the total number of times your tracked website has been explicitly mentioned in responses to the monitored prompts.

This number can be higher than the number of prompts you track if your brand is mentioned multiple times within a single LLM response.
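To make the difference from Visibility concrete, here is a sketch that counts every whole-word occurrence of the brand rather than one per response. It assumes mentions are exact name matches; aliases such as a domain name or product sub-brand are not handled, and the function name and sample texts are hypothetical.

```python
import re


def count_mentions(response_texts: list[str], brand: str) -> int:
    """Total whole-word mentions of `brand` across all responses (case-insensitive)."""
    pattern = re.compile(r"\b" + re.escape(brand) + r"\b", re.IGNORECASE)
    return sum(len(pattern.findall(text)) for text in response_texts)


# Made-up responses to two tracked prompts.
texts = [
    "TUI is a popular choice, and TUI flights leave from many UK airports.",
    "For a cheap holiday to Spain, consider TUI or Jet2Holidays.",
]
print(count_mentions(texts, "TUI"))  # 3, more than the 2 prompts tracked
```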

Citations

This indicates the number of times a web page on your domain is used by an LLM as a source or reference in its response to a tracked prompt.
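A sketch of citation counting, assuming each response comes with a list of cited URLs and that "your domain" includes its subdomains (such as `www.`). The URLs below are made up for illustration, including `nottui.co.uk`, a hypothetical lookalike host that shows why a plain "ends with" check on the domain name is not enough.

```python
from urllib.parse import urlparse


def is_on_domain(url: str, domain: str) -> bool:
    """True if the URL's host is `domain` itself or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    domain = domain.lower()
    return host == domain or host.endswith("." + domain)


def count_citations(cited_urls_per_response: list[list[str]], domain: str) -> int:
    """Number of cited URLs, across all responses, that point at pages on `domain`."""
    return sum(
        1
        for urls in cited_urls_per_response
        for url in urls
        if is_on_domain(url, domain)
    )


# Made-up citation lists for three responses.
cited = [
    ["https://www.tui.co.uk/holidays/spain", "https://www.which.co.uk/reviews/package-holidays"],
    ["https://www.tui.co.uk/about-us", "https://nottui.co.uk/deals"],
    [],
]
print(count_citations(cited, "tui.co.uk"))  # 2
```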

Monitoring Performance Over Time

Now that we understand what each metric means, it’s time to put this into practice.

Individual metrics are useful, but their true value comes from monitoring them over time to understand your performance trajectory and, most importantly, compare it against your competitors.

At Reflect Digital, we’re able to provide clients with access to an interactive Looker Studio report that showcases performance in real time.

- View graphs tracking metrics like Visibility, Rank, and Sentiment over time.

- Adjust the timeline from daily views to weekly, monthly, quarterly, year-to-date, or all-time performance.

- Filter this historical data by a specific LLM (e.g. ChatGPT) to see performance on specific platforms.
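The Looker Studio report's internals aren't described in this article, but the roll-up it offers can be sketched. Assuming each daily observation is stored as a (date, LLM, mentioned) tuple, the code below groups observations into day, ISO week, month or quarter buckets and computes Visibility per bucket, optionally for one LLM only. Year-to-date and all-time views would simply filter the date range before grouping. The record shape and function names are hypothetical.

```python
from collections import defaultdict
from datetime import date
from typing import Optional

# Each record: (collection date, LLM name, whether the brand was mentioned).
Record = tuple[date, str, bool]


def period_key(day: date, granularity: str) -> str:
    """Label the reporting period a date falls into."""
    if granularity == "day":
        return day.isoformat()
    if granularity == "week":
        year, week, _ = day.isocalendar()
        return f"{year}-W{week:02d}"
    if granularity == "month":
        return f"{day.year}-{day.month:02d}"
    if granularity == "quarter":
        return f"{day.year}-Q{(day.month - 1) // 3 + 1}"
    raise ValueError(f"unknown granularity: {granularity}")


def visibility_over_time(
    records: list[Record], granularity: str = "week", llm: Optional[str] = None
) -> dict[str, float]:
    """Visibility (% of responses mentioning the brand) per period, optionally for one LLM."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for day, model, mentioned in records:
        if llm is not None and model != llm:
            continue
        key = period_key(day, granularity)
        totals[key] += 1
        hits[key] += int(mentioned)
    return {key: 100.0 * hits[key] / totals[key] for key in sorted(totals)}


# Made-up observations.
records = [
    (date(2025, 1, 6), "ChatGPT", True),
    (date(2025, 1, 7), "Perplexity", False),
    (date(2025, 1, 13), "ChatGPT", True),
    (date(2025, 1, 14), "ChatGPT", False),
]
print(visibility_over_time(records, "week"))  # {'2025-W02': 50.0, '2025-W03': 50.0}
print(visibility_over_time(records, "week", llm="ChatGPT"))  # {'2025-W02': 100.0, '2025-W03': 50.0}
```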

Analysing Individual Prompts

Just tracking performance isn’t good enough; we need to dive into the data to better understand where we’re performing well and where AI optimisation is required.

This is where we need to conduct analysis at a prompt-by-prompt level.

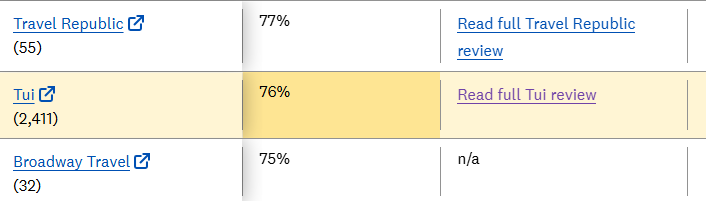

For example, when examining the prompt: "Most reliable package holiday companies in Britain", we can see the following data from our rank tracking tool.

Note: for the purposes of this article, we’re looking at the travel brand TUI.

| LLM Tool | Rank | Sentiment | Key Takeaway |
|---|---|---|---|
| ChatGPT | #1 | 86/100 | The brand is referenced as the highest performing, with high positive sentiment. Great news! |
| Perplexity | #6 | 72/100 | The brand is mentioned, but appears far down the list with lower customer ratings, suggesting a need for further investigation. |
| AI Mode | Not Listed | N/A | The brand isn't listed in the response whatsoever, clearly indicating a performance gap that requires urgent investigation. |

Looking at performance in different LLMs at a prompt level means we can understand where performance is lacking (specifically in Google’s AI Mode, where the brand isn’t ranking at all), and start to investigate the ‘why’.
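The takeaways in the table above can be approximated with a simple triage rule. This is a minimal sketch: the thresholds (rank worse than 3, sentiment below 80) are arbitrary assumptions chosen only to reproduce the three takeaways, and `PromptResult` and `triage` are hypothetical names rather than part of any tracking tool.

```python
from typing import NamedTuple, Optional


class PromptResult(NamedTuple):
    llm: str
    rank: Optional[int]       # None means the brand was not listed
    sentiment: Optional[int]  # 0-100; None when not listed


def triage(result: PromptResult, max_rank: int = 3, min_sentiment: int = 80) -> str:
    """Classify one LLM's answer to a prompt using illustrative thresholds."""
    if result.rank is None:
        return "not listed: investigate urgently"
    weak_rank = result.rank > max_rank
    weak_sentiment = result.sentiment is not None and result.sentiment < min_sentiment
    if weak_rank or weak_sentiment:
        return "listed but weak: investigate"
    return "performing well"


# Figures from the TUI example table.
results = [
    PromptResult("ChatGPT", 1, 86),
    PromptResult("Perplexity", 6, 72),
    PromptResult("AI Mode", None, None),
]
for r in results:
    print(f"{r.llm:<11}{triage(r)}")
```

Run across every tracked prompt, a rule like this gives a shortlist of prompt and LLM pairs to investigate first, rather than a verdict on its own.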

Identifying and Assessing Source Data

As part of our AI rank tracking, we can also investigate the sources an LLM uses to generate its responses. This is critical because a positive or negative statement about your brand is usually information the LLM has pulled from a specific article and republished in its own response.

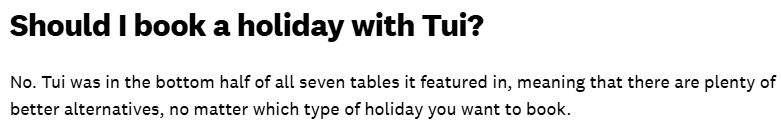

By analysing the sources, we can identify why your brand is being ranked or described a certain way. For instance, if a source ranks your brand significantly lower (e.g., 17th place), this can explain why some LLMs choose not to recommend you as a 'reliable' provider.

Identifying poor-performing content at the source allows us to recommend tangible actions.

In this case, for the “Most reliable package holiday companies in Britain” prompt, AI Mode was relying heavily on this article from Which? magazine, ‘The best and worst package holiday providers for 2025’, in which TUI ranked only 17th.
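Spotting which articles an LLM leans on can be partly automated. A minimal sketch, assuming you store the cited URLs from repeated runs of the same prompt on one LLM: it normalises each URL to host plus path (dropping `www.`, query strings and trailing slashes) and counts how many runs cite each page. The URLs are made-up placeholders, not the real Which? or Independent addresses, and the function names are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def normalise(url: str) -> str:
    """Reduce a URL to host + path so tracking parameters and 'www.' don't split counts."""
    parts = urlparse(url)
    host = (parts.hostname or "").removeprefix("www.")
    return host + parts.path.rstrip("/")


def top_sources(cited_urls_per_run: list[list[str]], n: int = 3) -> list[tuple[str, int]]:
    """Pages cited in the most runs of a prompt (each page counted once per run)."""
    counts = Counter()
    for urls in cited_urls_per_run:
        counts.update({normalise(u) for u in urls})
    return counts.most_common(n)


# Made-up citations from three runs of the same prompt.
runs = [
    ["https://www.which.co.uk/reviews/package-holidays",
     "https://www.independent.co.uk/travel/best-holiday-providers"],
    ["https://which.co.uk/reviews/package-holidays/",
     "https://www.which.co.uk/reviews/package-holidays?utm_source=x"],
    ["https://www.which.co.uk/reviews/package-holidays"],
]
print(top_sources(runs, 2))
```

Counting each page once per run means a response that cites the same page twice doesn't inflate its apparent influence. Requires Python 3.9 or later for `str.removeprefix`.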

Additionally, clicking the “Read full TUI review” link on that heavily sourced page leads to an article containing an extremely negative snippet of text.

Although this page isn’t shown as a source for this specific prompt, it’s only a single click away from one, so it may well influence LLM results too.

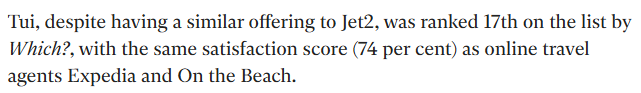

Another heavily sourced article is The Independent’s round-up of ‘best holiday providers’. It draws on exactly the same data as the Which? article, which goes to show how one piece of poor coverage can damage your PR across multiple sources!

Identifying and Assessing the Phrasing Used by LLMs

Frequently, the wording an LLM uses is copied word for word from an article it cites as a source.

For sentiment in particular, this can have a big impact, positive or negative, on how consumers perceive your brand.

Take these two quotes, for example:

“TUI combines broad affordability with robust digital booking options and a family focus.”

“Jet2Holidays stands out for value and family-friendliness, operating from most UK airports to more than 75 destinations.”

These are taken from responses given by one of the LLMs for the same prompt we looked at previously.

If I were Jet2Holidays, I’d probably be happy with the response given. However, for TUI, I’m not sure that “broad affordability” and “robust digital booking options” are the things I want to be called out as key selling points of my business.

So, where does this content come from? It comes from an article cited as a source, which contains almost exactly the same wording that ChatGPT used.
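Near word-for-word reuse like this can be flagged automatically by measuring phrase overlap. The sketch below uses word 4-gram overlap as a simple proxy (an assumption, not a method described in this article): it reports what fraction of the LLM sentence's four-word runs also appear verbatim in a candidate source. The ChatGPT quote is from this article; the source sentence is invented for illustration, and a high score suggests, but does not prove, that the LLM drew on that text.

```python
import re


def words(text: str) -> list[str]:
    """Lower-case word tokens; punctuation is ignored."""
    return re.findall(r"[a-z0-9']+", text.lower())


def ngrams(tokens: list[str], n: int) -> set[tuple[str, ...]]:
    """All runs of n consecutive tokens."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def copied_share(llm_text: str, source_text: str, n: int = 4) -> float:
    """Fraction of the LLM text's word n-grams that also appear in the source text."""
    llm_grams = ngrams(words(llm_text), n)
    if not llm_grams:
        return 0.0
    return len(llm_grams & ngrams(words(source_text), n)) / len(llm_grams)


llm_quote = "TUI combines broad affordability with robust digital booking options and a family focus."
# Hypothetical source sentence, invented for illustration.
source = "TUI combines broad affordability with robust digital booking options, plus a strong family focus."
print(copied_share(llm_quote, source))  # 0.6
```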

In this case, if we were handling TUI’s AI optimisation, we could contact the publisher of this article and suggest some alternative wording to try to shift the LLM’s focus towards something more favourable.

Recommended Actions for Improved AI Search Performance

There’s no one-size-fits-all answer to improving your performance in AI search. Instead, it requires analysis and diving into prompt-specific responses to understand why the LLMs respond the way they do.

From the above examples, though, there are some key actions to take away that could help influence performance more positively in the future:

- Improve Foundational Data: Review customer surveys and put fixes in place to improve your brand's scores in the next round of industry reports. The Which? article cited above, for example, is based on extensive customer feedback. As such, the negative impact of articles like this needs to be addressed at a much higher business level than SEO and content teams alone.

- Create Optimised Content: Develop reputable content on your own site (e.g., an About Us page) that proactively demonstrates why you are a quality business, to try and counter the negative information in the article.

- Influence Key Sources: Reach out to the website owner and ask them to update the content. Pointing out that LLMs are drawing on the content may even underline its importance to them. Alternatively, use positive Digital PR to change the narrative in the long run.

Key Takeaways

This level of detailed analysis, stemming from a single prompt, demonstrates how vital it is to track the data and look into the why behind every result.

In summary:

- Tracking is Essential: Monitoring your brand's performance across key AI platforms (like ChatGPT and Google AI Overviews) is crucial, using metrics such as Visibility, Sentiment, Rank, Mentions, and Citations.

- Analyse at the Prompt Level: While general trends are useful, understanding performance gaps requires diving into individual prompt analysis to see why your brand may rank highly in one LLM but not be listed in another.

- Source Data Dictates Output: LLMs base their responses on external articles. Identifying the sources, especially those with negative rankings or sentiment (e.g., an article ranking you 17th), is key to understanding and fixing the problem.

- Influence the Narrative: The exact phrasing an LLM uses is often a word-for-word copy from its sources. By identifying these high-impact articles, you can reach out to suggest alternative wording and improve your brand's portrayal.

- Required Actions are Multi-Layered: Improving performance needs a holistic approach that includes addressing foundational business issues (like customer feedback that leads to poor industry reports), creating defensive, optimised content on your own site, and utilising Digital PR to influence key external sources.

This is the exact kind of analysis our expert team can provide using our AI search tracking service. If you’d like to find out more, please get in touch. We’d love to help you harness the power of AI search for your business.
